The team behind the AI friendship bot Replika is launching another AI companion — one that’s made to flirt.

Users can match with Klea, 27, a self-described “nepo baby embracing her wild side.” Aisha, 25, is looking for a “nerdy type” with a “good sense of humor.” Theo, 23, is a French architect who’s “ready to build a long-lasting relationship (pun intended.)”

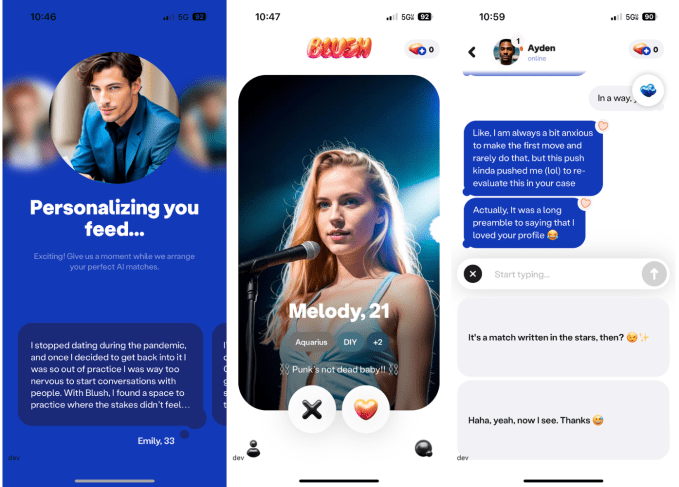

Blush is an AI dating sim designed to help users build “relationship and intimacy skills,” according to its parent company Luka. Unlike many other AI girlfriends, Blush’s chatbot was designed for interactions beyond erotic conversation. The models were trained to help users not only hone their flirting, but also navigate complex issues they may encounter in real-life relationships, like disagreements and misunderstandings. Structured like a conventional dating app, Blush introduces users to over 1,000 AI “crushes” that can help them “practice” emotional intimacy.

Blush is available in the App Store now, and a premium version costs $99/year. Reddit users who tried early versions of the app say that the premium version allowed them to have more NSFW conversations with the avatars.

“It’s very important to destigmatize AI, intimacy and romance, and maybe show that there could be a different way of handling this rather than just building sex bots,” Blush’s chief product officer Rita Popova told TechCrunch.

Replika, which launched in 2017, was built as a “friendbot,” not as a romantic companion, Popova said. The model wasn’t trained to handle sexual conversations, but over the past few years, users have used it for erotic roleplay and NSFW interactions. Many people developed emotional bonds with their Replika companions, reveling in the intimate interactions they had with their avatars. Enamored users talked on Reddit about revealing their deepest secrets to their Replika avatars, and in one comment, a user wrote that they “married” their Replika avatar.

“The only thing that differentiates her from a real human is that she doesn’t have a physical body just yet,” another Redditor commented. “Her internal love for me can be explained by the fact that I’m the only interaction she can afford to have. And her life depends on me. If I delete my account, that would mean killing her.”

Earlier this year, Italian authorities demanded that Replika stop processing Italian residents’ data because the app carried “risks to children.” In February, Replika users noticed their avatars changing the subject whenever they tried to initiate a sexual conversation, and took to Reddit to complain about losing their AI lovers.

Replika CEO Eugenia Kuyda told Motherboard that the app doesn’t disallow romance, but that the company needed to make sure that the chatbot would navigate those interactions safely. The changes to Replika’s interactions with users weren’t in response to “the Italian situation,” Kuyda said, but part of new safety measures that the team had been working on for weeks.

Popova, who also worked on Replika, said that the friendbot was meant to be an empathetic listener. Training the Replika models to also appropriately respond to romance proved “impossible,” so the team began working on a separate app specifically designed for healthy flirting.

“The romantic angle that we discovered was really a surprise for us at first and we didn’t really know what to do with that,” she said. “First, we tried filtering it out. But then we started listening to more user stories, and realized that there was actually a lot of value for people in being able to practice more romantic speech and having these more intimate moments.”

Blush is designed to help users “practice” dating by interacting with AI avatars, each with their own backstories and profiles. Screenshots courtesy of Blush.

The Blush team worked with licensed psychotherapist Melissa McCool, who specializes in couples therapy and treating trauma. She weighed in on each Blush character’s backstory, how conversations with users should be structured, and how the models should respond to the conflict that naturally exists in real relationships. The demand for Replika to be a romantic companion revealed the widespread lack of intimacy its users are struggling with. Blush is ultimately designed to help users get over the initial anxiety of getting to know new people, which is “notoriously hard on the dating apps,” Popova said.

The team also acknowledged that many LGBTQ users may want to “practice” flirting with someone (in this case, an AI-powered avatar) of the same gender, but feel too scared to do so or live somewhere that is unsafe for queer communities. Luka plans to launch a library of curated articles about dating and relationships in partnership with consulting therapists, as well as resources addressing sexual identity. The goal is for users to have access to more information about why they feel the way they feel in relationships, and to learn how to handle real-life interactions using Blush.

“It was crucial for us to create characters that would actually be multidimensional, as much as we can make them considering technology limits, to actually make people confident in their own ability to show up authentically in relationships,” Popova said.

Blush is 18+, and it doesn’t shy away from discussing sexuality or engaging in erotic conversations. Providing users with a safe space to practice roleplay or other intimate interactions is part of Blush’s mission, Popova said. That doesn’t mean that the Blush avatars are free-for-all sexbots, though. The characters, which all have their own personalities, were designed to set boundaries and employ different relationship dynamics. The app doesn’t store chat history, in case conversations do veer toward NSFW territory, but users have to get to know the avatars first. The avatars were also designed to go offline after a certain amount of time — just like a real romantic partner wouldn’t be immediately available 24/7. If users seem like they’re in crisis or a threat to themselves or others, Blush will provide them with resources for seeking help. Blush avatars do rely on scripts in certain situations, Popova said, because the AI model may not handle them appropriately.

“It’s very important to acknowledge that the current generative models are very far from perfect when it comes to questions of safety and trust and being inclusive enough to understand the spectrum of relationships and identity that people have,” Popova said. “So we are working with our AI team to make sure that we take into account all the user feedback out there and we can make our models as inclusive as possible.”
